🤖 GPT-3: The Singularity is Near (?), The Story of SimCity and More

A deep dive into what GPT-3 is, what it can do, and recommended reading to prepare for The Singularity

👋 Welcome to Gray Matters 🧠, a newsletter where we share interesting reflections about Tech, Software, and Productivity.

If you enjoy this and are not yet subscribed, please consider signing up. It will sincerely make our day.

#🧠 #AI #singularity

Last weekend Twitter started to get flooded with thousands of tweets showing the capabilities of GPT-3, the latest evolution of OpenAI’s text prediction model. I recommend carefully going through Joan’s links below; some of them will blow your mind. Best of all, this is only the beginning, and it is impossible to know what will be possible in the coming years!

So, in case you are interested in what the future can bring (I mean the “real long-term future”, not the boring next 5 years and their geopolitical changes with no impact on global history), I must recommend one of my favorite books ever: The Singularity Is Near by Ray Kurzweil.

You need to read it with patience (800+ pages) and a really, really open and positive mind, as it presents some very disruptive and controversial ideas (brain upload, nanobots in your bloodstream, computronium, etc.). But after you read it, your ideas about the future will change drastically.

What a time to be alive!

— Mario

Mario's links

When SimCity got serious: the story of Maxis Business Simulations and SimRefinery: If you are reading this newsletter, you surely know SimCity, and I bet you have played some of the Sim-saga games. But you probably didn’t know that Maxis (SimCity’s creators) had a division back in the early 90s that developed business simulation games, with SimRefinery being one of the most famous. The article tells the story of Maxis with a special focus on SimRefinery, a game that was thought lost forever but that, thanks to the article, was sent to Ars Technica by a former Chevron refinery employee and is now accessible to anyone. I recommend the following LGR Blerbs video on SimRefinery and its amazing story:

How e-commerce platform Elliot fell back down to Earth: Success stories are great, but a lot of lessons can be learned from epic downfalls. I hadn’t really heard of Elliot before, but the story is captivating and well worth reading.

Don't Publish On Medium: Medium is one of the most hated publishing platforms, but it is still probably the most popular and preferred one thanks to its simplicity and how easy it makes reaching a wide audience fast. Both Joan and I write periodically on Medium, and we’ve had numerous conversations on Medium vs. Substack vs. a proprietary site; we are still not sure which is the best option. Obviously, Medium has some limitations, which Brian Balfour summarizes in his article:

You Are Building Medium's Brand, Not Your Own

You Have Little Ownership Over the Audience

Monetization Is Garbage

While I agree with the first two, I strongly disagree, at least for the moment, with the third one, as I have been able to earn a respectable figure ($500+) on certain Medium articles thanks to Medium’s reach.

Slack’s hazy future 🔮: Will Slack be able to survive against Microsoft Teams and even grow in other markets like video conferencing, productivity, and the organization of global remote teams, or will it fall to Teams and its many other competitors? Worth reading!

A startup I liked: Pitch. Pitch published its July blog post with some product updates and use cases. Presentations will be disrupted shortly (I hope) and, for the moment, Pitch is one of the best-positioned contenders. I am eager to receive an invitation to the beta and see if it really can replace PowerPoint!

Joan's section

#🤖 #AI #GPT-3

You’ve probably seen some tweets or articles about GPT-3, the latest evolution of OpenAI’s text-prediction model, a “few-shot learner” that has been found to do much more than just predict text.

As Gwern puts it:

[…] however, is not merely a quantitative tweak yielding “GPT-2 but better”—it is qualitatively different, exhibiting eerie runtime learning capabilities allowing even the raw model, with zero finetuning, to “meta-learn” many textual tasks purely by example or instruction. One does not train or program GPT-3 in a normal way, but one engages in dialogue and writes prompts to teach GPT-3 what one wants.
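To make “writing prompts to teach GPT-3” a bit more concrete, here is a minimal Python sketch of how a few-shot prompt could be put together. The function name `build_few_shot_prompt` and the “Input:/Output:” layout are our own illustrative assumptions, not anything prescribed by OpenAI or Gwern, and the sketch only builds the text; it does not call any model.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: List[Tuple[str, str]],
    query: str,
) -> str:
    """Assemble a plain-text prompt: instruction, worked examples, then the open query.

    Hypothetical helper for illustration only; the layout is an assumption.
    """
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # The final "Output:" is left empty on purpose: a text-prediction model
    # would be expected to continue the pattern from this point.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Translate English to French.",
    [("cheese", "fromage"), ("good morning", "bonjour")],
    "thank you",
)
print(prompt)
```

The idea is that nothing gets “trained” here: the examples live inside the text itself, and the model is simply asked to predict what comes next.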

GPT-3 has been making so much noise lately because the people who have access to it (not everyone does yet) have started showing their creations to the world. And, let me tell you, they’re pretty amazing. Some examples:

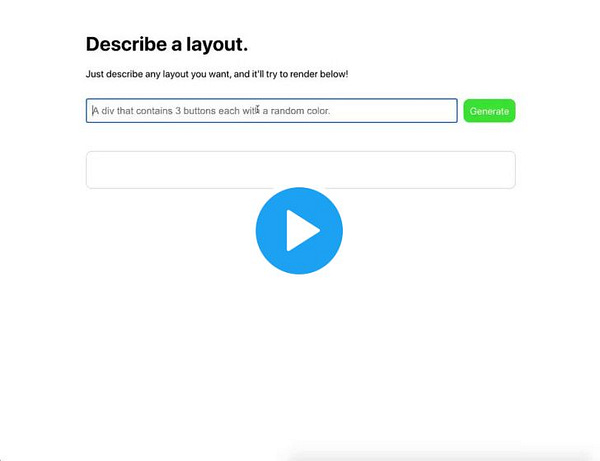

Using GPT-3 to generate Web layouts:

Using GPT-3 to explain tweets:

I am completely floored. Someone run a thought of mine through GPT-3 to expand it into an explanation of what I had in mind, and it's like 95% meaningful and 90% correct. I don't think that I have seen a human explanation of my more complicated tweets approaching this accuracy :)

@Plinz I didn't quite understand everything in your tweet, so I passed it through the @OpenAI #GPT3 API. It took multiple runs and some tweaks, and I'm still not sure I can trust it entirely, but here's what it came up with. I think I understand your point now. Or have I been misled? https://t.co/X0noJX7D0v

Jesse Szepieniec @jessems

This Dungeons & Dragons game uses GPT-3 to dynamically generate the game story on the fly, after reading each of your prompts 😵

Many more examples in this amazing Twitter thread by @xuenay.

If you are interested in learning more about how GPT-3 works, I recommend this video by Computerphile, where you will see the model writing poetry and doing math without anyone having taught it how:

I will leave you with a possibly prophetic tweet from Paul Graham

Addendum on 2020-07-23: To keep us grounded in reality, an interesting note on how GPT-3 might not be a stepping stone towards AGI, via Marginal Revolution.